Big Data: Frequently used HDFS commands in Real Time

Posted by Sumit Kumar

HDFS commands

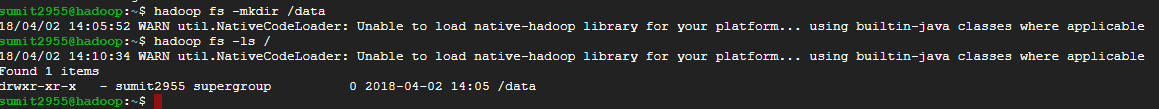

1) mkdir (Create a directory)

hadoop fs -mkdir /data

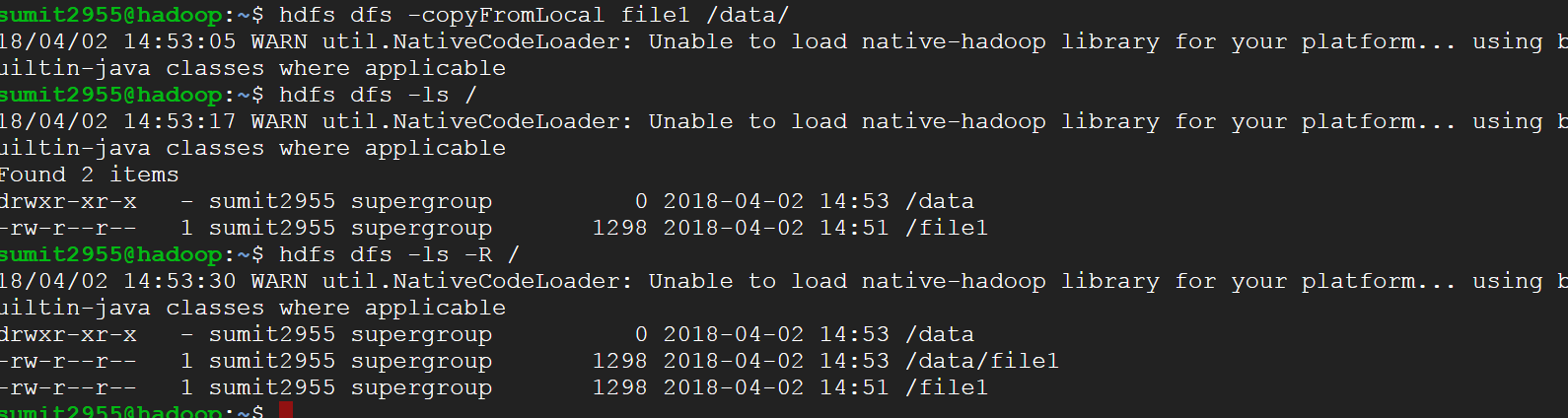

2) copyFromLocal (Copy a file or directory from local to HDFS)

To copy file1 from the local file system into the HDFS directory /data, use the command below:

hadoop fs -copyFromLocal file1 /data/

Note: The same command can copy several files at once, files matching a pattern, every file in a directory, or a whole directory.
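As a rough sketch of the multi-file case in the note above, the helper below wraps the two commands seen so far. The function name `upload_to_hdfs` is hypothetical, and the sketch assumes the `hadoop` client is on the PATH and that the `-p` option of `-mkdir` is available in your Hadoop version.

```shell
# upload_to_hdfs DEST FILE...
# Creates DEST in HDFS if it is missing, then copies every listed local
# file or directory into it with a single copyFromLocal call.
# (upload_to_hdfs is an illustrative name, not a Hadoop command.)
upload_to_hdfs() {
  dest="$1"
  shift
  hadoop fs -mkdir -p "$dest" && hadoop fs -copyFromLocal "$@" "$dest"
}
```

For example, `upload_to_hdfs /data file1 *.txt` lets the local shell expand `*.txt` before the files are passed to copyFromLocal.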

- moveFromLocal (Move a file or directory from local to HDFS)

hadoop fs -moveFromLocal /home/training/Local/file1 /home/training/hdfs

- copyToLocal (Copy a file or directory from HDFS to local)

hadoop fs -copyToLocal /home/training/hdfs/file1 /home/training/Local

- moveToLocal (Not yet implemented)

- cp (Copy a file from one location to another inside HDFS)

hadoop fs -cp /home/training/hdfs/file1 /home/training/hdfs/hdfs1

- mv (Move a file from one location to another inside HDFS)

hadoop fs -mv /home/training/hdfs/file1 /home/training/hdfs/hdfs1

- put (Similar to copyFromLocal)

hadoop fs -put /home/training/Local/file1 /home/training/hdfs

- get (Similar to copyToLocal)

hadoop fs -get /home/training/hdfs/file1 /home/training/Local

- getmerge (Merges the contents of multiple HDFS files into a single file on the local file system)

hadoop fs -getmerge /home/training/hdfs/file1 /home/training/hdfs/file2 /home/training/Local/f3

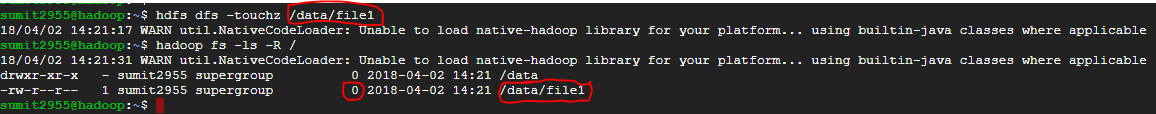

11) touchz (Create one or more empty files in HDFS)

hdfs dfs -touchz /data/file1

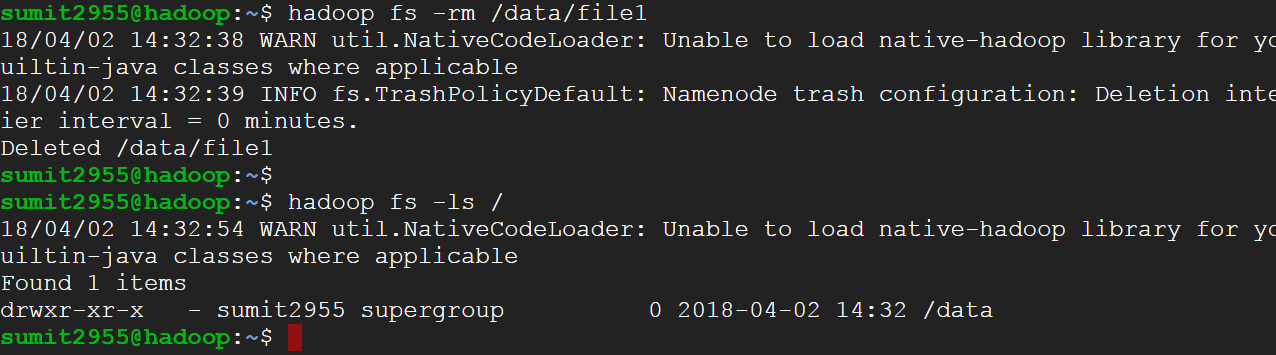

12) rm (Remove a file): deletes a file from HDFS

hadoop fs -rm /data/file1

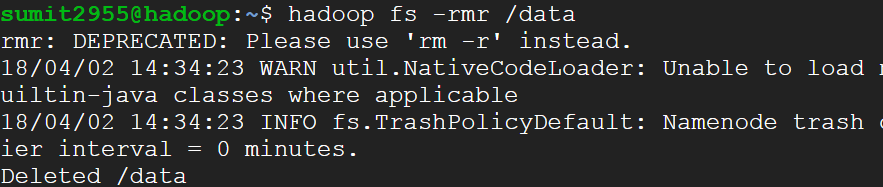

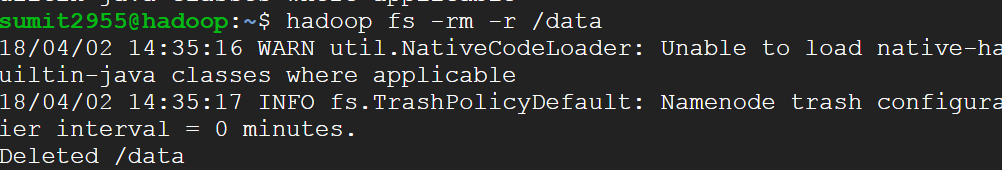

- rmr or rm -r (Delete a directory from HDFS)

hadoop fs -rmr /data (the -rmr command is deprecated in newer versions of Hadoop)

or

hadoop fs -rm -r /data

Note: rm can also remove files matching a pattern (*.sh, *.txt, etc.) or all files (*).

- ls (Lists all the files and directories)

hadoop fs -ls /home/training/hdfs

- ls | tail -n (Show only the last n lines of the listing)

hadoop fs -ls /home/training/hdfs | tail -n 10

- ls | head -n (Show only the first n lines of the listing)

hadoop fs -ls /home/training/hdfs | head -n 10

- cat (Displays the content of a file)

hadoop fs -cat /home/training/hdfs/file

- text (Displays the content of compressed files)

hadoop fs -text /home/training/hdfs/file.gz

- cat | tail -n (Display the bottom n lines of a file)

hadoop fs -cat /home/training/hdfs/file | tail -n 10

- cat | head -n (Display the top n lines of a file)

hadoop fs -cat /home/training/hdfs/file | head -n 10

- cat | wc -l (Counts the number of lines in a file)

hadoop fs -cat /user/sumit/hdfstest/file1 | wc -l

- cat | wc -w (Counts the number of words in a file)

hadoop fs -cat /user/sumit/hdfstest/file1 | wc -w

- cat | wc -c (Counts the number of bytes in a file, which equals the number of characters for single-byte encodings)

hadoop fs -cat /user/sumit/hdfstest/file1 | wc -c
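The cat and wc pipelines above can be folded into a small reusable helper. This is only a sketch: `hdfs_line_count` is a hypothetical name, and it assumes the `hadoop` client is on the PATH. Note that the whole file is streamed to the client, so this can be slow for very large files.

```shell
# hdfs_line_count PATH
# Streams an HDFS file through wc -l and prints just the number.
# awk strips the leading padding that some wc implementations add.
hdfs_line_count() {
  hadoop fs -cat "$1" | wc -l | awk '{ print $1 }'
}
```

For example, `hdfs_line_count /user/sumit/hdfstest/file1` prints the line count of that file.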

- du (Disk usage of a file or directory)

hadoop fs -du /home/training/hdfs

- du -h (Shows file or directory sizes in human-readable format)

hadoop fs -du -h /home/training/hdfs

- du -s (Shows a summary for the directory instead of each file)

hadoop fs -du -s /home/training/hdfs

- df (Disk usage of the entire file system)

hadoop fs -df

O/P:

Filesystem Size Used Available Use%

hdfs://hadoop 328040332591104 102783556870823 210750795833344 31%

- df -h (Shows the same figures in human-readable format)

hadoop fs -df -h

O/P:

Filesystem Size Used Available Use%

hdfs://hadoop 298.4 T 93.5 T 191.7 T 31%
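To see how the two outputs relate, the sketch below converts a raw byte count into the unit shown by -h. It assumes that "T" means 1024^4 bytes, which is consistent with the sample figures above; `bytes_to_tib` is a hypothetical helper name.

```shell
# bytes_to_tib BYTES
# Divides a byte count by 1024^4 and prints it with one decimal place.
bytes_to_tib() {
  awk -v bytes="$1" 'BEGIN { printf "%.1f\n", bytes / (1024 * 1024 * 1024 * 1024) }'
}

bytes_to_tib 328040332591104   # Size      -> 298.4
bytes_to_tib 102783556870823   # Used      -> 93.5
bytes_to_tib 210750795833344   # Available -> 191.7
```

The Use% column is simply Used divided by Size: 102783556870823 / 328040332591104 is about 0.31, or 31%.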

- count (Counts all the directories and files in the given path)

hadoop fs -count /home/training/hdfs

- fsck (Checks file system health)

hadoop fsck /home/training/hdfs

- fsck -files -blocks (Displays the files and their block-level info)

hadoop fsck /home/training/hdfs -files -blocks

- fsck -files -blocks -locations (Displays files and block-level info, including the block locations; adding -racks also prints the network topology of those locations)

hadoop fsck /home/training/test_hdfs/f1.txt -files -blocks -locations -racks

- setrep (Changes the replication factor of a file or a directory)

hadoop fs -setrep 5 /home/training/hdfs/file1

hadoop fs -setrep -w 5 /user/training/test_hdfs/ABC

-w requests that the command wait for the replication to complete. This can potentially take a very long time.
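One way to confirm that a setrep call took effect is to read the replication factor back with `-stat %r`. The sketch below combines the two steps for a single file; `set_replication` is a hypothetical helper name, and the sketch assumes the `hadoop` client is on the PATH.

```shell
# set_replication FACTOR FILE
# Changes the replication factor of one HDFS file, waits for the
# re-replication to finish (-w), then prints the factor now recorded
# for the file (%r).
set_replication() {
  factor="$1"
  path="$2"
  hadoop fs -setrep -w "$factor" "$path" && hadoop fs -stat %r "$path"
}
```

For example, `set_replication 5 /home/training/hdfs/file1` should print 5 once the command returns. Because of -w, the call can block for a long time on large files.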

- Controlling block size at file level without changing the block size in hdfs-site.xml

hadoop fs -D dfs.blocksize=134217728 -put source_path destination_path

Here 134217728 bytes is 128 MB. dfs.block.size is the older, deprecated name of the same property.

- Controlling replication at file level irrespective of the default replication set to 3

hadoop fs -D dfs.replication=2 -put source_path destination_path

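- Setting replication factor for a directory in HDFS

hadoop fs -setrep -R 5 /user/training/test_hdfs/ABC

Note: This changes the replication factor of every file already under the directory to 5, irrespective of the default replication. Files written there later still get the replication factor configured for the client that writes them, unless it is overridden as shown above.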

- Safe Mode (leave, enter, or query the NameNode's read-only safe mode)

hadoop dfsadmin -safemode leave

hadoop dfsadmin -safemode enter

hadoop dfsadmin -safemode get
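In scripts it can be handy to turn the safemode query into a yes/no check. The sketch below assumes the command reports the state with the text "Safe mode is ON" or "Safe mode is OFF"; `in_safemode` is a hypothetical helper name.

```shell
# in_safemode
# Succeeds (exit status 0) when the NameNode reports that safe mode is on,
# and fails otherwise.
in_safemode() {
  hadoop dfsadmin -safemode get | grep -q 'Safe mode is ON'
}
```

For example: `if in_safemode; then echo "NameNode is read-only, try again later"; fi`.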

- Delete all the files in trash

hadoop fs -expunge

- Copying a file from one cluster to another cluster

hadoop distcp hdfs://namenodeA/test_hdfs/emp.csv hdfs://namenodeB/test_hdfs
